'Hearing aid' picks out voices in a crowd

Posted by CENTURY HEARING

A next generation of hearing aids that unscramble multiple voices, just like the human brain, could be on its way.

Artificial intelligence researchers have long struggled to solve 'the cocktail party problem' of picking out individual voices from a cacophony of speakers.

But now researchers in the US have built a prototype that can tell the difference between three different voices speaking simultaneously with 97 per cent accuracy.

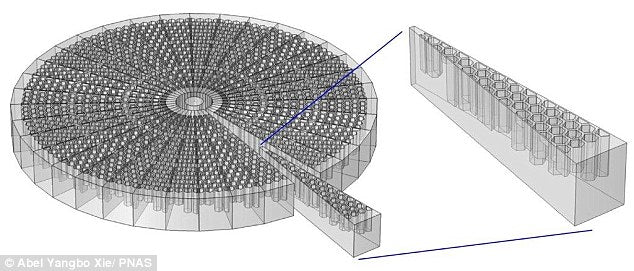

The device can work out which direction a voice is coming from, thereby separating multiple voices. Specially built metamaterials with a hexagonal structure are used to subtly alter sound waves entering the device.

The invention is a 3D-printed device that can tease apart multiple voices without the need for sophisticated electronics.

The device consists of 36 plastic tunnels arranged in a disc with a microphone at the centre.

Each passage alters the sound waves that enter it in a slightly different way, which makes it easy for a computer to pick them apart.

The insides of the tubes are made from a metamaterial - an artificial substance built specifically to affect sound waves bouncing off it.

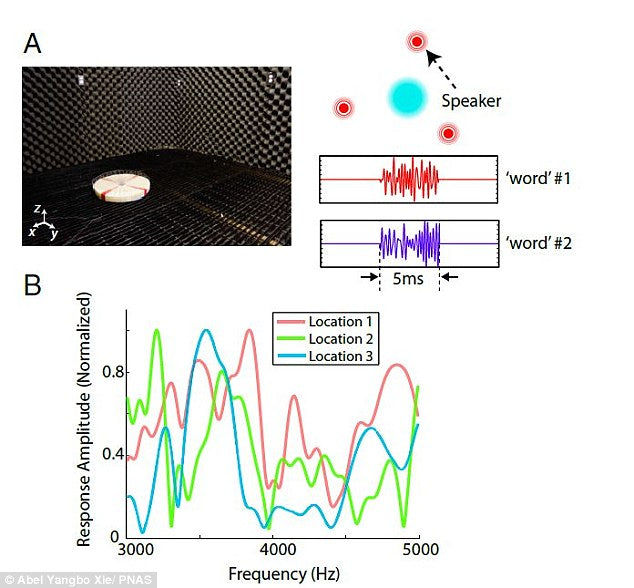

The device can then identify individual voices and sounds by working out which direction they are coming from.

Senior author Steven Cummer of Duke University, North Carolina, compared the process to the sound made when you blow into the tops of bottles containing water.

When you blow into the top of a bottle it makes a certain note depending on how much water the bottle contains, Cummer told Nature.

Each tube contains hexagons that bounce sound, altering it in a different way, much as multiple bottles holding different amounts of water each produce a different note.
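As a rough illustration of the bottle analogy, the note of a blown bottle can be approximated with the standard Helmholtz resonator formula, which ignores small corrections at the ends of the neck. The bottle dimensions below are made-up values for illustration and are not figures from the study.

```python
import math

def bottle_note_hz(air_volume_m3, neck_area_m2, neck_length_m, speed_of_sound=343.0):
    """Approximate Helmholtz resonance of a bottle (end corrections ignored)."""
    return (speed_of_sound / (2 * math.pi)) * math.sqrt(
        neck_area_m2 / (air_volume_m3 * neck_length_m)
    )

# Hypothetical bottle: 0.75 litre capacity, 0.95 cm neck radius, 8 cm neck length.
BOTTLE_VOLUME = 0.00075                 # cubic metres
NECK_AREA = math.pi * 0.0095 ** 2       # square metres
NECK_LENGTH = 0.08                      # metres

# Adding water shrinks the air space, which raises the note.
for water_fraction in (0.0, 0.25, 0.5, 0.75):
    air_volume = BOTTLE_VOLUME * (1 - water_fraction)
    note = bottle_note_hz(air_volume, NECK_AREA, NECK_LENGTH)
    print(f"{water_fraction:.0%} full of water: about {note:.0f} Hz")
```

The fuller the bottle, the smaller the pocket of air and the higher the pitch, which is why a row of differently filled bottles each "sings" its own note.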

While the human ear is unable to notice the subtle changes made to the sound waves by the chambers, a computer algorithm can almost always tell which direction the voice is coming from.
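To give a feel for how a computer might do this with a single microphone, here is a toy sketch that matches a recording against a library of known per-direction "fingerprints". It is not the researchers' algorithm: the cosine-shaped fingerprints, the 128 frequency bands, the even 10-degree spacing of the 36 tunnels and the assumption that each speaker has a flat spectrum are all simplifications invented for this example.

```python
import math

NUM_DIRECTIONS = 36   # one tunnel per direction; even 10-degree spacing is assumed
NUM_BANDS = 128       # hypothetical number of frequency bands

def signature(direction):
    """Hypothetical frequency fingerprint imposed by one tunnel."""
    return [math.cos(2 * math.pi * (direction + 1) * k / NUM_BANDS)
            for k in range(NUM_BANDS)]

SIGNATURES = [signature(d) for d in range(NUM_DIRECTIONS)]

def mix(sources):
    """Simulate the single microphone: add up each speaker's fingerprint,
    scaled by loudness. `sources` maps direction index -> loudness."""
    measured = [0.0] * NUM_BANDS
    for direction, loudness in sources.items():
        for k, value in enumerate(SIGNATURES[direction]):
            measured[k] += loudness * value
    return measured

def estimate_directions(measured, num_sources):
    """Score every direction by correlating the recording with its
    fingerprint, then keep the strongest matches."""
    scores = []
    for direction, sig in enumerate(SIGNATURES):
        score = sum(m * s for m, s in zip(measured, sig))
        scores.append((abs(score), direction))
    scores.sort(reverse=True)
    return sorted(direction for _, direction in scores[:num_sources])

# Three simultaneous speakers at different directions and loudness levels.
speakers = {3: 1.0, 17: 0.8, 30: 0.6}
found = estimate_directions(mix(speakers), num_sources=3)
print([d * 10 for d in found])  # angles in degrees: [30, 170, 300]
```

Because these made-up fingerprints do not overlap with one another, the three speakers stand out cleanly. Real tunnels and real speech are far messier, which is why the actual system needs a more sophisticated algorithm.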

'Our proposed multispeaker listening system functionally mimics the selective listening capability of human auditory systems,' wrote the researchers in a paper published in Proceedings of the National Academy of Sciences.

The device identifies individual voices and sounds by working out which direction they are coming from. It does this using an array of 36 'waveguides' arranged in a circle that each subtly alter sound waves.

'The system employs only a single sensor, yet it can reconstruct the segregated signals with high fidelity.

'The device is also very simple and robust, as the passive metamaterial structure modulates the signal and, other than the microphone, no electronic or active components are used.'

One obstacle to using the device as a hearing aid is its size - the disc for the prototype is currently 16 inches (40 cm) wide.

After separating the sound waves entering the chambers, a computer algorithm can - in 97 per cent of cases - tell which direction the voice is coming from.

'It is this big in order to provide a full 360 degrees of coverage,' Cummer told The Times.

'The key step for a lot of different applications is shrinking the device by a factor of ten or more. We are actively exploring approaches to try to do this.'

In an earlier study, scientists discovered how the human brain separates different voices in a crowded room.

Brain waves are shaped in a way that allows the brain to selectively track sound patterns from the speaker of interest while simultaneously ignoring other voices.

Using recordings of brain activity from epilepsy patients, researchers Dr. Schroeder and Dr. Elana Zion Golumbic of Columbia University discovered what happens in the brain when a person pays attention to one of many voices.

In the auditory cortex, brain waves reflect both attended and ignored speech, but attended speech generates higher amplitudes.

However, in regions of the brain involved in higher-order processing such as language and attention control, representation of attended speech was clear, while that of ignored speech was not detectable.

They also found that the higher-order representation of these brain waves was refined as a sentence unfolded.

Read more: http://www.dailymail.co.uk/sciencetech/article-3193504/Hearing-aid-picks-voices-crowd-Device-mimics-brain-processes-sound-using-cocktail-party-effect.html#ixzz3k94719hS
